Comparing top PaaS and deployment providers

Many solutions today let developers deploy and manage applications while abstracting away the complexities of infrastructure management.

That said, each platform offers distinct approaches to deployment, resource management, scaling, and pricing, which will shape your workflow and operational costs.

This guide compares the following providers:

- Vercel

- Railway

- Render

- Fly.io

- DigitalOcean App Platform

- Heroku

With this comparison, you’ll be able to make an informed decision about which platform best suits your needs. Here’s a high-level summary of how the platforms compare.

Legend

- ✅ Full support

- ⚠️ Partial support or requires workarounds

- ❌ Not supported

| Feature | Vercel | Railway | Render | Fly | DigitalOcean | Heroku |
| --- | --- | --- | --- | --- | --- | --- |
| DEPLOYMENT | | | | | | |
| Deployment Model | Serverless functions | Long-running servers | Long-running servers | Lightweight VMs | Long-running servers | Long-running servers |
| Docker Support | ❌ No | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| Source Code Deploy | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| Multi-Service Projects | ❌ No (one-to-one) | ✅ Yes | ✅ Yes | ❌ No | ✅ Yes | ❌ No |
| INFRASTRUCTURE | | | | | | |
| Runs On | AWS (serverless) | Own hardware | AWS/GCP | Own hardware | Own hardware | AWS |
| Max Memory | 4 GB | Plan-based | Instance-based | Configurable | Instance-based | Instance-based |
| Execution Limits | 13.3 min max | None | None | None | None | None |
| Cold Starts | Yes | No | No | No | No | No |
| Persistent Storage via Volumes | ❌ No | ✅ Yes | ✅ Yes | ✅ Yes | ❌ No | ❌ No |
| DATABASES & STORAGE | | | | | | |
| Database Support | Via marketplace | ✅ One-click deploy of any open-source database | ✅ Native | ✅ Native | ✅ Native | ✅ Native (via add-ons) |
| SCALING | | | | | | |
| Vertical Autoscaling | ✅ Automatic | ✅ Automatic | ⚠️ Manual/threshold | ⚠️ Manual/threshold | ⚠️ Manual/threshold | ⚠️ Manual/threshold |
| Horizontal Scaling | ✅ Yes | ✅ Yes, by deploying replicas | ✅ Yes, configure min and max number of concurrent instances | ✅ Yes, by deploying fly-autoscaler | ✅ Yes, configure min and max number of concurrent instances | ✅ Yes, configure min and max number of concurrent instances |
| Multi-Region Support | ✅ Yes | ✅ Native | ❌ No (requires manual setup) | ✅ Native | ❌ No (requires manual setup) | ❌ No (requires manual setup) |
| PRICING | | | | | | |
| Pricing Model | Usage-based: active compute time + resources used | Usage-based: active compute time + resources used | Instance-based | Machine state-based | Instance-based | Instance-based |
| Billing Factors | CPU time + memory + invocations | Active compute time × size | Fixed monthly per instance; when scaling horizontally, instance size × total running time | Running time + CPU type | Fixed monthly per instance | Fixed monthly per instance |
| Scales to Zero | ✅ Yes | ✅ Supported via app sleeping | ❌ No | ✅ Supported via autostop | ❌ No | ❌ No |
| CI/CD & ENVIRONMENTS | | | | | | |
| GitHub Integration | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| PR Preview Environments | ✅ Yes | ✅ Yes | ✅ Yes | ⚠️ Not supported out of the box; requires setting up a CI/CD pipeline | ❌ No | ✅ Yes |
| Environment Support | ✅ Built-in | ✅ Built-in | ✅ Built-in | ⚠️ Separate orgs | ⚠️ Separate projects | ✅ Built-in |
| Instant Rollbacks | ✅ Yes | ✅ Yes | ✅ Yes | ❌ No | ✅ Yes | ✅ Yes |
| Pre-Deploy Commands | ✅ Yes | ✅ Yes | ✅ Yes | ⚠️ Manual when setting up a deployment pipeline | ✅ Yes | ✅ Yes |
| OBSERVABILITY | | | | | | |
| Built-in Monitoring | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes (Prometheus) | ✅ Yes | ✅ Yes |
| Integrated Logs | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| DEVELOPER TOOLS | | | | | | |
| Infrastructure as Code | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| CLI Support | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| SSH Access | ❌ No | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| Webhooks | ✅ Yes | ✅ Yes | ✅ Yes | ❌ No | ❌ No | ✅ Yes |
| NETWORKING | | | | | | |
| Custom Domains | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| Wildcard Domains | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ❌ No |
| Managed TLS | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| Private Networking | ❌ No | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ⚠️ Paid add-on |
| Health Checks | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| ADDITIONAL FEATURES | | | | | | |
| Native Support for Cron Jobs | ✅ Yes | ✅ Yes | ✅ Yes | ❌ No | ❌ No | ✅ Yes |
| Shared Variables | ⚠️ Within project | ✅ Yes | ✅ Yes | ⚠️ Manual | ✅ Yes | ❌ No |

Vercel

Vercel makes it possible to deploy web applications and static sites while abstracting away infrastructure management and scaling.

Vercel has developed a proprietary deployment model where infrastructure components are derived from the application code through a concept called Framework-defined infrastructure. At build time, application code is parsed and translated into the necessary infrastructure components. Server-side code is then deployed as serverless functions. Note that Vercel does not support the deployment of Docker images or containers.

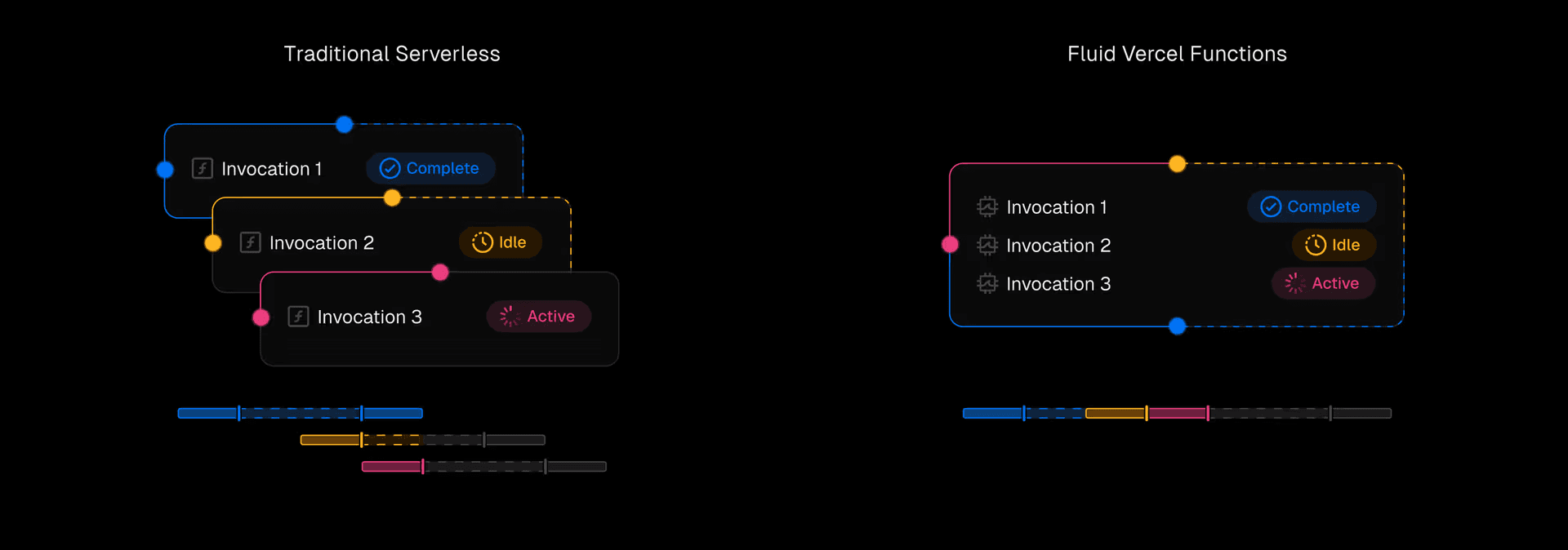

To handle scaling, Vercel creates function instances on demand as requests arrive, and its Fluid compute system lets a single instance handle multiple requests concurrently. When traffic stops, functions scale down to zero to save on compute resources.
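As a rough mental model (a toy sketch, not Vercel's actual scheduler), the number of instances needed for a burst of traffic depends on how many requests each instance may serve at once. The function name and the `max_concurrency_per_instance` parameter are hypothetical illustrations.

```python
import math


def instances_needed(in_flight_requests: int, max_concurrency_per_instance: int) -> int:
    """Toy model: how many function instances a burst of in-flight requests needs."""
    if in_flight_requests <= 0:
        return 0  # no traffic: the function has scaled to zero
    # With a concurrency of 1 this is one instance per request; higher
    # per-instance concurrency lets one instance serve several requests.
    return math.ceil(in_flight_requests / max_concurrency_per_instance)


# Hypothetical numbers, for illustration only.
print(instances_needed(10, 1))  # one instance per request
print(instances_needed(10, 4))  # several requests share each instance
```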

Vercel Fluid Compute

Vercel functions are billed based on three primary factors:

- Active CPU: Time your code actively runs in milliseconds

- Provisioned memory: Memory held by the function instance, for the full lifetime of the instance

- Invocations: number of function requests, where you’re billed per request
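To make the three billing factors concrete, here is a minimal cost estimate. The rates are hypothetical placeholders rather than Vercel's published prices, the per-hour and per-million units are assumptions chosen for readability, and any allowance included in a plan is ignored.

```python
from dataclasses import dataclass


@dataclass
class FunctionUsage:
    active_cpu_ms: float        # time the code actively ran on the CPU, in milliseconds
    memory_gb: float            # memory provisioned for each instance, in GB
    instance_lifetime_s: float  # total time instances were alive, in seconds
    invocations: int            # number of function requests


@dataclass
class Rates:
    # Hypothetical placeholder rates, NOT Vercel's published prices.
    per_cpu_hour: float
    per_gb_hour: float
    per_million_invocations: float


def estimate_cost(usage: FunctionUsage, rates: Rates) -> float:
    """Usage-based estimate that ignores any allowance included in a plan."""
    cpu_hours = usage.active_cpu_ms / 1000 / 3600
    # Memory is billed for the instance's whole lifetime, not only while the CPU is busy.
    gb_hours = usage.memory_gb * usage.instance_lifetime_s / 3600
    invocation_millions = usage.invocations / 1_000_000
    return (cpu_hours * rates.per_cpu_hour
            + gb_hours * rates.per_gb_hour
            + invocation_millions * rates.per_million_invocations)


usage = FunctionUsage(active_cpu_ms=3_600_000, memory_gb=2.0,
                      instance_lifetime_s=7_200, invocations=2_000_000)
print(estimate_cost(usage, Rates(1.0, 0.5, 0.25)))
```

With these made-up rates, one CPU-hour, four GB-hours, and two million invocations add up to 3.5 units. Note how memory accrues for the full two-hour lifetime even though the CPU was only busy for one hour.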

Each pricing plan includes an allocation of these metrics, so you pay for what you use. However, since Vercel runs on AWS, it has to price its services high enough to offset the cost of the underlying infrastructure. Those extra costs are then passed down to you as the user, so you end up paying extra for resources such as bandwidth, memory, CPU, and storage.

In Vercel, a project maps to a deployed application. If you would like to deploy multiple apps, you’ll do it by creating multiple projects. This one-to-one mapping can complicate architectures with multiple services.

Vercel Dashboard

Vercel also includes built-in observability and monitoring.

observability

There’s also support for automated preview environments for every pull request.

Vercel PR bot

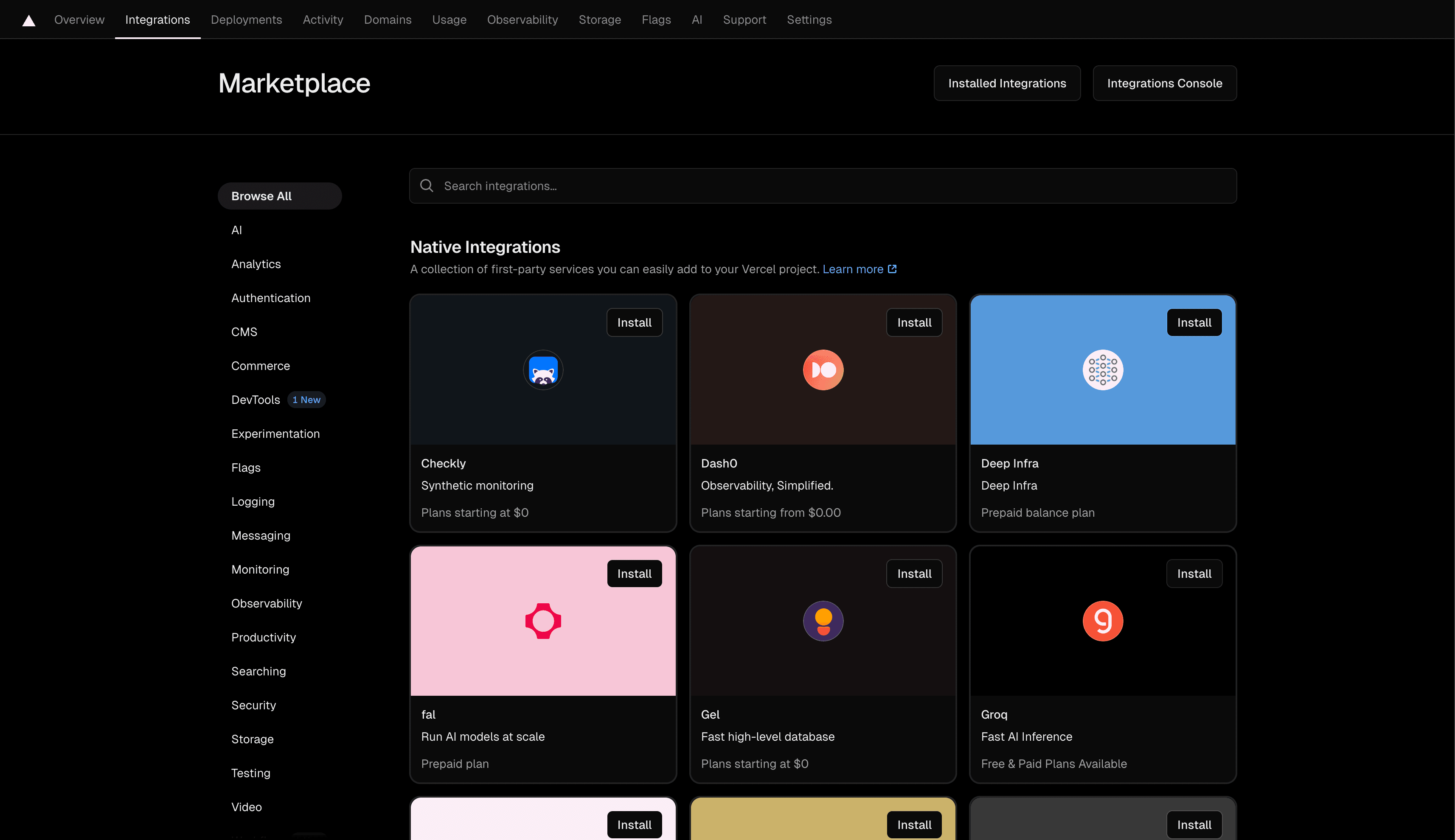

If you would like to integrate your app with other infrastructure primitives (e.g., storage for your application’s database, caching, or analytical storage), you can do it through the Vercel Marketplace. This gives you an integrated billing experience; however, you still manage each service through its original provider, so you have to switch back and forth between different dashboards while building your app.

Vercel Marketplace

This serverless deployment model abstracts away infrastructure, but introduces significant limitations:

- Memory limits: the maximum amount of memory per function is 4GB

- Execution time limit: the maximum amount of time a function can run is 800 seconds (~13.3 minutes)

- Size (after gzip compression): the maximum is 250 MB

- Cold starts: when a function instance is created for the first time, requests incur added latency. Vercel includes several optimizations, such as bytecode caching, that reduce the impact of cold starts but don’t eliminate them entirely
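The hard limits above can be turned into a quick pre-flight check before choosing Vercel for a workload. This is a minimal sketch: the constants simply restate the figures quoted in this guide (check Vercel's documentation for current values), and the function name is hypothetical.

```python
# Limits as quoted in this guide; check Vercel's documentation for current values.
MAX_MEMORY_GB = 4
MAX_DURATION_S = 800  # ~13.3 minutes
MAX_BUNDLE_MB = 250   # function size after gzip compression, as stated above


def vercel_limit_violations(memory_gb: float, duration_s: float, bundle_mb: float) -> list[str]:
    """Return the limits a workload would exceed; an empty list means it fits."""
    violations = []
    if memory_gb > MAX_MEMORY_GB:
        violations.append(f"memory: {memory_gb} GB > {MAX_MEMORY_GB} GB")
    if duration_s > MAX_DURATION_S:
        violations.append(f"duration: {duration_s} s > {MAX_DURATION_S} s")
    if bundle_mb > MAX_BUNDLE_MB:
        violations.append(f"size: {bundle_mb} MB > {MAX_BUNDLE_MB} MB")
    return violations


# A hypothetical 30-minute video transcode that needs 8 GB of memory.
print(vercel_limit_violations(memory_gb=8, duration_s=30 * 60, bundle_mb=120))
```

A job like this breaks both the memory and the execution-time limit, which is why the workloads listed below are a poor fit for serverless functions.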

If you plan to run long-running workloads, Vercel functions are not the right fit. Examples include:

- Data Processing: ETL jobs, large file imports/exports, analytics aggregation

- Media Processing: Video/audio transcoding, image resizing, thumbnail generation

- Report Generation: Creating large PDFs, financial reports, user summaries

- DevOps/Infrastructure: Backups, CI/CD tasks, server provisioning

- Billing & Finance: Usage calculation, invoice generation, payment retries

- User Operations: Account deletion, data merging, stat recalculations

Similarly, workloads that require a persistent connection are incompatible:

- Chat messaging: Live chats, typing indicators

- Live dashboards: Metrics, analytics, stock tickers

- Collaboration: Document editing, presence

- Live tracking: Delivery location updates

- Push notifications: Instant alerts

- Voice/video calls: Signaling, status updates

Railway

Railway enables you to deploy applications to long-running servers, making it ideal for applications that need to stay running or maintain a persistent connection. You can deploy your apps as services from a Docker image or by importing your source code.

Railway’s dashboard offers a real-time collaborative canvas where you can view all of your running services and databases at a glance. Projects contain multiple services and databases (frontend, APIs, workers, databases, queues). You can group the different infrastructure components and visualize how they’re related to one another.

Deploy everything in one place

You also have programmatic control over your resources through Infrastructure-as-Code (IaC) definitions and a command-line interface.

You can connect your GitHub repository to enable automatic builds and deployments whenever you push code, and create isolated preview environments for every pull request.

Create environment

If issues arise, you can revert your app to previous versions. Railway’s integrated build pipeline supports pre-deploy commands, and you can run arbitrary commands against deployed services via SSH.

When it comes to observability, Railway has integrated metrics and logs that help you track application performance.

Observability Dashboard

Finally, Railway supports networking features such as public and private networking, custom domains with managed TLS, and wildcard domains.

Railway has first-class support for databases. You can one-click deploy any open-source database:

- Relational: Postgres, MySQL

- Analytical: ClickHouse, Timescale

- Key-value: Redis, Dragonfly

- Vector: Chroma, Weaviate

- Document: MongoDB

Check out all of the different storage solutions you can deploy.

Railway automatically scales compute resources based on workload without manual threshold configuration. Each plan has defined CPU and memory limits.

You can scale horizontally by deploying multiple replicas of your service. Railway automatically distributes public traffic randomly across replicas within each region. Each replica runs with the full resource limits of your plan.

Creating replicas for horizontal scaling

Replicas can be placed in different geographical locations. The platform automatically routes public traffic to the nearest region, then randomly distributes requests among the available replicas within that region.
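The routing rule described above (nearest region first, then a random replica within it) can be sketched as follows. Railway performs this at its edge, not in your application code; here "nearest" is modeled as the lowest client latency, and the region names, latencies, and replica IDs are hypothetical.

```python
import random


def route_request(client_latency_ms: dict[str, float],
                  replicas_by_region: dict[str, list[str]],
                  rng: random.Random) -> str:
    """Send a request to the nearest region with replicas, then to a random replica there."""
    regions = [region for region, replicas in replicas_by_region.items() if replicas]
    if not regions:
        raise RuntimeError("no replicas are running")
    # "Nearest" is modeled here as the lowest measured latency from the client.
    nearest = min(regions, key=lambda region: client_latency_ms.get(region, float("inf")))
    return rng.choice(replicas_by_region[nearest])


# Hypothetical regions, latencies, and replica IDs.
latency = {"us-west": 20.0, "eu-west": 140.0}
replicas = {"us-west": ["usw-1", "usw-2"], "eu-west": ["euw-1"]}
print(route_request(latency, replicas, random.Random(0)))
```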

Finally, if you would like to save on compute resources, you can enable app sleeping, which suspends a running service after 10 minutes of inactivity. A sleeping service becomes active again when it receives an incoming request.
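App sleeping behaves like a small state machine. The sketch below is a simplified model, not Railway's implementation: it treats "inactivity" as time since the last request, and the class and method names are hypothetical.

```python
from dataclasses import dataclass

IDLE_TIMEOUT_S = 10 * 60  # 10 minutes of inactivity, as described above


@dataclass
class SleepingService:
    last_request_at: float = 0.0  # timestamp of the last request, in seconds
    asleep: bool = False

    def tick(self, now: float) -> None:
        """Suspend the service once it has been idle for the full timeout."""
        if not self.asleep and now - self.last_request_at >= IDLE_TIMEOUT_S:
            self.asleep = True

    def handle_request(self, now: float) -> bool:
        """Serve a request; return True if it had to wake a sleeping service first."""
        woke_up = self.asleep
        self.asleep = False
        self.last_request_at = now
        return woke_up


service = SleepingService()
service.tick(now=9 * 60)    # still awake after 9 idle minutes
service.tick(now=10 * 60)   # suspended after 10 idle minutes
print(service.handle_request(now=11 * 60))  # first request wakes it up
```

The trade-off is that the first request after a sleep pays a wake-up delay, so app sleeping suits services with intermittent traffic.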

Railway follows a usage-based pricing model that depends on how long your service runs and the amount of resources it consumes.

Active compute time × compute size (memory and CPU)

Railway autoscaling

If you spin up multiple replicas for a given service, you’ll only be charged for the active compute time for each replica.
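The formula above, applied per replica, looks like this. The per-minute units and the rates are hypothetical placeholders, not Railway's published prices, and this is a sketch of the billing idea rather than Railway's billing engine.

```python
from dataclasses import dataclass


@dataclass
class ReplicaUsage:
    active_minutes: float  # minutes this replica was actively running
    vcpu: float            # average vCPUs used while active
    memory_gb: float       # average memory used while active, in GB


def usage_cost(replicas: list[ReplicaUsage],
               per_vcpu_minute: float, per_gb_minute: float) -> float:
    """Active compute time x compute size, summed over every replica."""
    return sum(
        r.active_minutes * (r.vcpu * per_vcpu_minute + r.memory_gb * per_gb_minute)
        for r in replicas
    )


# Two replicas of one service with different activity; rates are hypothetical.
replicas = [ReplicaUsage(100, 1.0, 2.0), ReplicaUsage(50, 0.5, 1.0)]
print(usage_cost(replicas, per_vcpu_minute=0.01, per_gb_minute=0.005))
```

Because each replica contributes only its own active time and size, a lightly used replica adds proportionally less to the bill than a busy one.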

Railway's underlying infrastructure runs on hardware that’s owned and operated in data centers across the globe. By controlling the hardware, software, and networking stack end to end, the platform delivers best-in-class performance, reliability, and powerful features, all while keeping costs in check.

Render

Render is similar to Railway in the following aspects:

- You can deploy your app from a Docker image or by importing your app’s source code from GitHub.

- Multi-service architecture where you can deploy different services under one project (e.g. a frontend, APIs, databases, etc.).

- Services are deployed to a long-running server.

- Services can have persistent storage via volumes.

- Public and private networking are included out-of-the-box.

- Healthchecks are available to guarantee zero-downtime deployments.

- Connect your GitHub repository for automatic builds and deployments on code pushes.

- Create isolated preview environments for every pull request.

- Support for instant rollbacks.

- Integrated metrics and logs.

- Define Infrastructure-as-Code (IaC).

- Command-line interface (CLI) to manage resources.

- Integrated build pipeline with the ability to define pre-deploy commands.

- Support for wildcard domains.

- Custom domains with fully managed TLS.

- Schedule tasks with cron jobs.

- Run arbitrary commands against deployed services (SSH).

- Shared environment variables across services.

That said, there are some differences between the platforms that might make Railway a better fit for you.

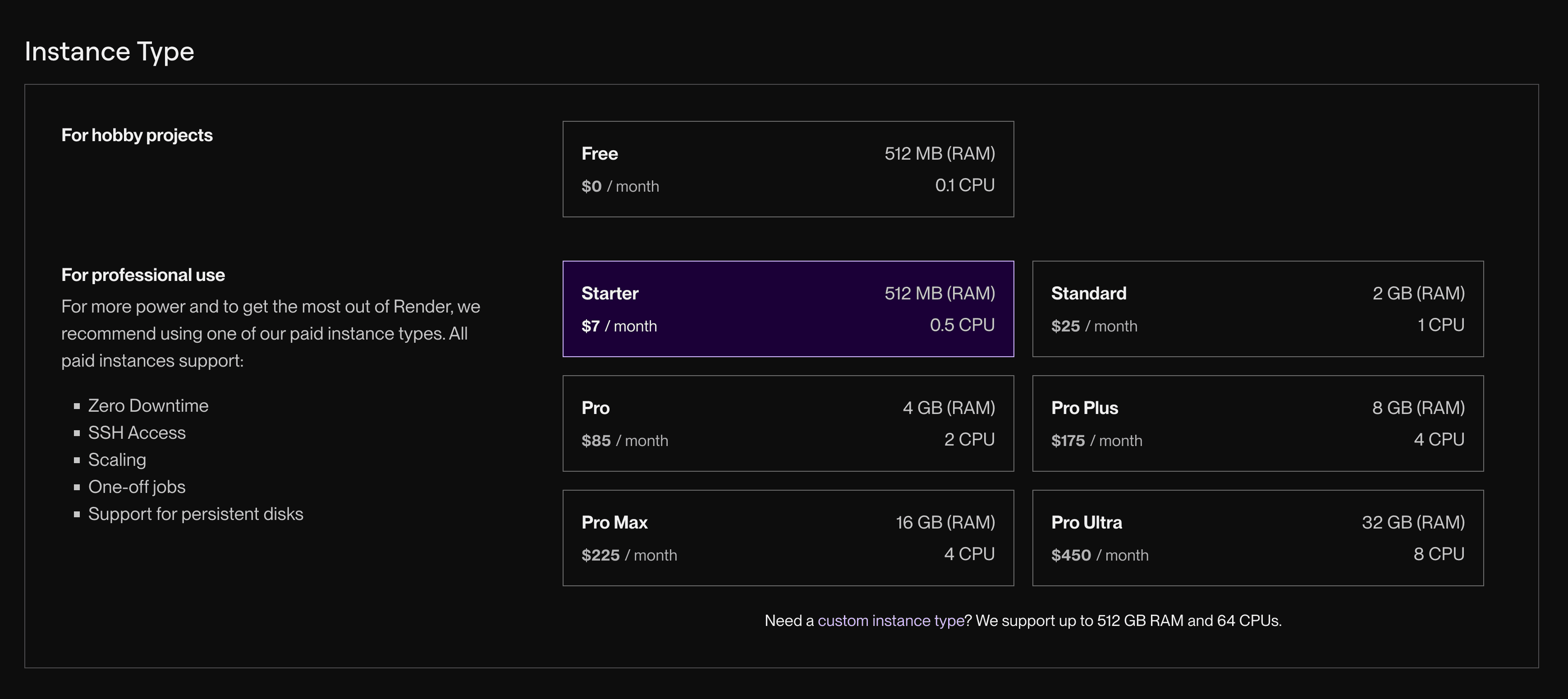

Render follows a traditional, instance-based model. Each instance has a set of allocated compute resources (memory and CPU).

In the scenario where your deployed service needs more resources, you can either scale:

- Vertically: you will need to manually upgrade to a larger instance size to unlock more compute resources.

- Horizontally: your workload will be distributed across multiple running instances. You can either:

  - Manually specify the instance count.

  - Autoscale by defining a minimum and maximum instance count. The number of running instances will increase or decrease based on a target CPU and/or memory utilization you specify.
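To see what the min/max and target-utilization settings control, here is a sketch of threshold-based horizontal autoscaling. Render's exact algorithm isn't described here, so this uses the widely known proportional rule from Kubernetes' Horizontal Pod Autoscaler as a stand-in; the function name and numbers are hypothetical.

```python
import math


def desired_instances(current: int, current_util_pct: float, target_util_pct: float,
                      min_instances: int, max_instances: int) -> int:
    """Proportional target-tracking rule, clamped to [min_instances, max_instances]."""
    if current_util_pct <= 0:
        desired = min_instances
    else:
        # Scale the instance count by how far utilization is from the target.
        desired = math.ceil(current * current_util_pct / target_util_pct)
    return max(min_instances, min(max_instances, desired))


# Hypothetical: 2 instances at 90% CPU with a 60% target and a 1..5 range.
print(desired_instances(2, 90, 60, min_instances=1, max_instances=5))
```

The target and the bounds are exactly the thresholds you have to tune: a low target or a high minimum keeps extra instances running, while a tight maximum caps capacity during spikes.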

While this approach covers scaling within a single region, Render does not offer native multi-region support. To achieve a globally distributed deployment, you must provision separate instances in different regions and set up an external load balancer to route traffic between them.

The main drawback of this setup is that it requires manual developer intervention, either by:

- Manually changing instance sizes/running instance count.

- Manually adjusting thresholds because you can get into situations where your service scales up for spikes but doesn’t scale down quickly enough, leaving you paying for unused resources.

Render follows a traditional, instance-based pricing model. You select the amount of compute resources you need from a list of instance sizes where each one has a fixed monthly price.

Render Instances

While this model gives you predictable pricing, the main drawback is you end up in one of two situations:

- Under-provisioning: your deployed service doesn’t have enough compute resources, which will lead to failed requests.

- Over-provisioning: your deployed service will have extra unused resources that you’re overpaying for every month.
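The sketch below illustrates why fixed sizes force this choice: you size for the peak, then pay for the gap between peak and average. The instance names and memory sizes are hypothetical and do not reflect any provider's real catalogue.

```python
from typing import Optional

# Hypothetical instance catalogue (name -> memory in GB); not any provider's real sizes.
INSTANCE_MEMORY_GB = {"small": 0.5, "medium": 2.0, "large": 4.0}


def pick_instance(peak_memory_gb: float) -> Optional[str]:
    """Smallest instance that covers the peak; None means every size under-provisions."""
    for name, memory_gb in sorted(INSTANCE_MEMORY_GB.items(), key=lambda item: item[1]):
        if memory_gb >= peak_memory_gb:
            return name
    return None


def idle_fraction(avg_memory_gb: float, instance_memory_gb: float) -> float:
    """Share of the paid-for capacity left unused on average (over-provisioning)."""
    return 1 - avg_memory_gb / instance_memory_gb


# A service that averages 0.5 GB but peaks at 1.5 GB.
size = pick_instance(1.5)
print(size, idle_fraction(0.5, INSTANCE_MEMORY_GB[size]))
```

Sizing for the 1.5 GB peak selects the 2 GB instance, leaving 75% of its memory unused on average; sizing for the average instead would under-provision during peaks.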

Enabling horizontal autoscaling can help optimize costs, but the trade-off is that you need to figure out the right scaling thresholds instead.

Additionally, Render runs on AWS and GCP, so it has to price its services high enough to offset the cost of the underlying infrastructure. Those extra costs are then passed down to you as the user, so you end up paying extra for:

- Additional features (e.g., horizontal autoscaling and environments are only available on paid plans).

- Resources (e.g., bandwidth, memory, CPU, and storage).

- Seats: each team member you invite adds a fixed monthly fee regardless of your usage.

Fly.io

At a high level, Fly.io is similar to Render and Railway in the following ways:

- You can deploy your app from a Docker image or by importing your app’s source code from GitHub.

- Apps are deployed to a long-running server.

- Apps can have persistent storage through volumes.

- Public and private networking are included out-of-the-box.

- Healthchecks to guarantee zero-downtime deployments.

- Connect your GitHub repository for automatic builds and deployments on code pushes.

That said, there are differences when it comes to the overall developer experience.

When you deploy your app to Fly, your code runs on lightweight Virtual Machines (VMs) called Fly Machines. Each machine needs a defined amount of CPU and memory. You can either choose from preset sizes or configure them separately, depending on your app’s needs.

Machines come with two CPU types:

- Shared CPUs: 6% guaranteed CPU time with bursting capability. Subject to throttling under heavy usage.

- Performance CPUs: Dedicated CPU access without throttling.

Fly machines run on hardware that’s owned and operated in data centers across the globe, with support for multi-region deployments.

When scaling your app, you have one of two options:

- Scale a machine’s CPU and RAM: you will need to manually pick a larger instance. You can do this using the Fly CLI or API.

- Increase the number of running machines. There are two options:

  - You can manually increase the number of running machines using the Fly CLI or API.

  - Fly can automatically adjust the number of running or created Fly Machines. Two forms of autoscaling are supported:

    - Autostop/autostart: Fly stops idle Machines and starts them again when requests arrive.

    - Metrics-based autoscaling: by deploying fly-autoscaler, you can adjust the number of Machines based on metrics.

Scaling on Fly

Fly Pricing

Fly charges for compute based on two primary factors: machine state and CPU type (shared vs. performance).

Machine state determines the base charge structure. Started machines incur full compute charges, while stopped machines are only charged for root file system (rootfs) storage. The rootfs size depends on your OCI image plus containerd optimizations applied to the underlying file system.

Fly also offers reserved compute blocks, which require an annual upfront payment and provide monthly credits that don’t roll over.

Fly Machines are charged based on running time, regardless of utilization.

Fly provides a CLI-first experience through flyctl, allowing you to create and deploy apps, manage Machines and volumes, configure networking, and perform other infrastructure tasks directly from the command line.

However, Fly lacks built-in CI/CD capabilities. This means you can’t:

- Create isolated preview environments for every pull request

- Perform instant rollbacks

To access these features, you’ll need to integrate third-party CI/CD tools like GitHub Actions.

Similarly, Fly doesn’t include native environment support for development, staging, and production workflows. To achieve proper environment isolation, you must create separate organizations for each environment and link them to a parent organization for centralized billing management.

For monitoring, Fly automatically collects metrics from every application using a fully managed Prometheus service based on VictoriaMetrics. The system scrapes metrics from all application instances and provides data on HTTP responses, TCP connections, memory usage, CPU performance, disk I/O, network traffic, and filesystem utilization.

The Fly dashboard includes a basic Metrics tab displaying this automatically collected data. Beyond the basic dashboard, Fly offers a managed Grafana instance at fly-metrics.net with detailed dashboards and query capabilities using MetricsQL as the querying language. You can also connect external tools through the Prometheus API.

fly-metrics.net

Alerting and custom dashboards require multiple tools and query languages. Additionally, Fly doesn’t support webhooks, making it more difficult to build integrations with external services.

DigitalOcean App Platform

At a high level, DigitalOcean App Platform is similar to Railway and Render in the following ways:

- You can deploy your app from a Docker image or by importing your app’s source code from GitHub.

- Multi-service architecture where you can deploy different services under one project (e.g. a frontend, APIs, databases, etc.).

- Services are deployed to a long-running server.

- Public and private networking are included out-of-the-box.

- Healthchecks are available to guarantee zero-downtime deployments.

- Connect your GitHub repository for automatic builds and deployments on code pushes.

- Support for instant rollbacks.

- Integrated metrics and logs.

- Define Infrastructure-as-Code (IaC).

- Command-line interface (CLI) to manage resources.

- Integrated build pipeline with the ability to define pre-deploy commands.

- Support for wildcard domains.

- Custom domains with fully managed TLS.

- Run arbitrary commands against deployed services (SSH).

- Shared environment variables across services.

Similar to Render, DigitalOcean App Platform follows a traditional, instance-based model.

Each instance has a set of allocated compute resources (memory and CPU) and runs on hardware that’s owned and operated in data centers across the globe.

In the scenario where your deployed service needs more resources, you can either scale:

- Vertically: you will need to manually upgrade to a larger instance size to unlock more compute resources.

- Horizontally: your workload will be distributed across multiple running instances. You can either:

  - Manually specify the instance count.

  - Autoscale by defining a minimum and maximum instance count. The number of running instances will increase or decrease based on a target CPU and/or memory utilization you specify.

While this approach covers scaling within a single region, DigitalOcean App Platform does not offer native multi-region support. To achieve a globally distributed deployment, you must provision separate instances in different regions and set up an external load balancer to route traffic between them.

Furthermore, services deployed to the platform do not offer persistent data storage. Any data written to the local filesystem is ephemeral and will be lost upon redeployment, meaning you’ll need to integrate with external storage solutions if your application requires data durability.

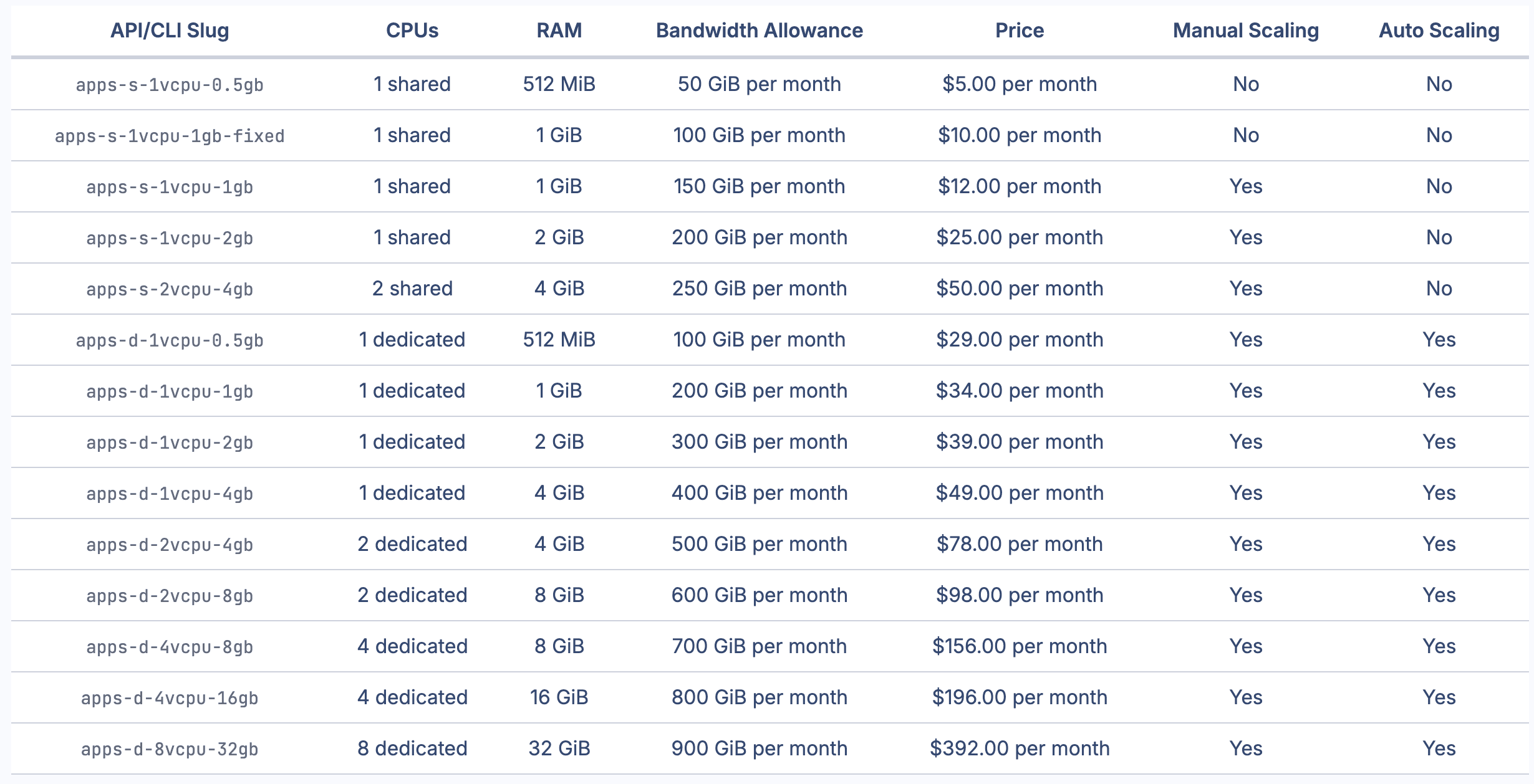

DigitalOcean Instances

DigitalOcean App Platform follows a traditional, instance-based pricing model. You select the amount of compute resources you need from a list of instance sizes where each one has a fixed monthly price.

Fixed pricing results in:

- Under-provisioning: your deployed service doesn’t have enough compute resources, which will lead to failed requests

- Over-provisioning: your deployed service will have extra unused resources that you’re overpaying for every month

Horizontal autoscaling requires threshold tuning.

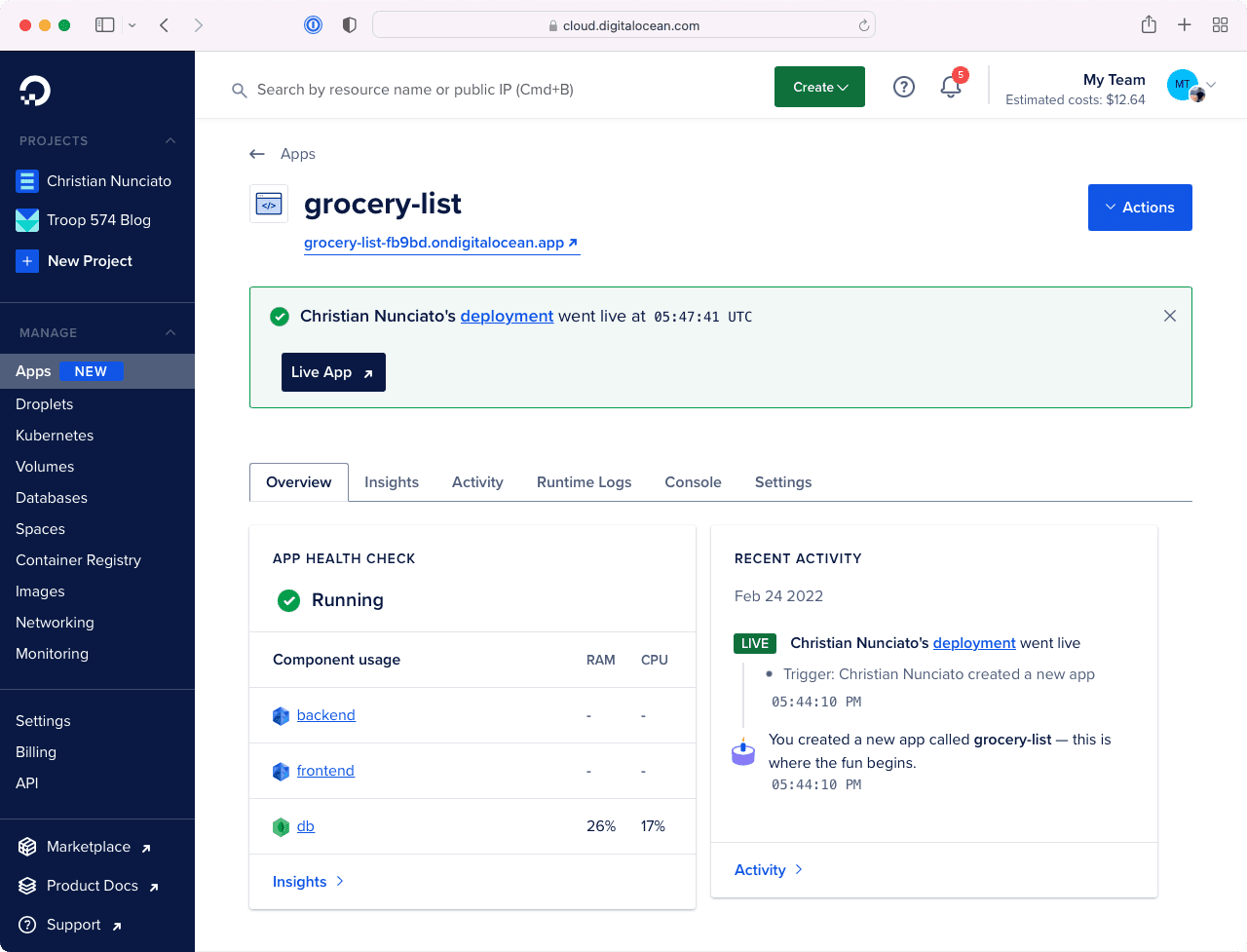

DigitalOcean App Platform offers a traditional dashboard where you can view all of your project’s resources.

DigitalOcean Dashboard

However, DigitalOcean App Platform lacks built-in CI/CD capabilities around environments:

- No concept of “environments” (e.g., development, staging, and production). To achieve proper environment isolation, you must create separate projects for each environment.

- No native support for automatically creating isolated preview environments for every pull request. To achieve this, you’ll need to integrate third-party CI/CD tools like GitHub Actions.

Finally, DigitalOcean App Platform doesn’t support webhooks, making it more difficult to build integrations with external services.

Heroku

Heroku is similar to Railway, Render, and DigitalOcean App Platform in the following ways:

- You can deploy your app from a Docker image or by importing your app’s source code from GitHub.

- Services are deployed to a long-running server.

- Connect your GitHub repository for automatic builds and deployments on code pushes.

- Create isolated preview environments for every pull request.

- Support for instant rollbacks.

- Integrated metrics and logs.

- Define Infrastructure-as-Code (IaC).

- Command-line interface (CLI) to manage resources.

- Integrated build pipeline with the ability to define pre-deploy commands.

- Custom domains with fully managed TLS.

- Run arbitrary commands against deployed services (SSH).

That said, there are some differences.

Heroku follows a traditional, instance-based model. Each instance has a set of allocated compute resources (memory and CPU).

In the scenario where your deployed service needs more resources, you can either scale:

- Vertically: you will need to manually upgrade to a larger instance size to unlock more compute resources

- Horizontally: your workload will be distributed across multiple running instances. You can either:

  - Manually specify the instance count

  - Autoscale by defining a minimum and maximum instance count. The number of running instances will increase or decrease based on a target CPU and/or memory utilization you specify

This requires manual intervention:

- Manually changing instance sizes/running instance count

- Manually adjusting thresholds because you can get into situations where your service scales up for spikes but doesn’t scale down quickly enough, leaving you paying for unused resources

Heroku lacks native multi-region support. A globally distributed deployment requires separate instances in each region and an external load balancer to route traffic between them.

Furthermore, services deployed to the platform do not offer persistent data storage. Any data written to the local filesystem is ephemeral and will be lost upon redeployment, meaning you’ll need to integrate with external storage solutions if your application requires data durability.

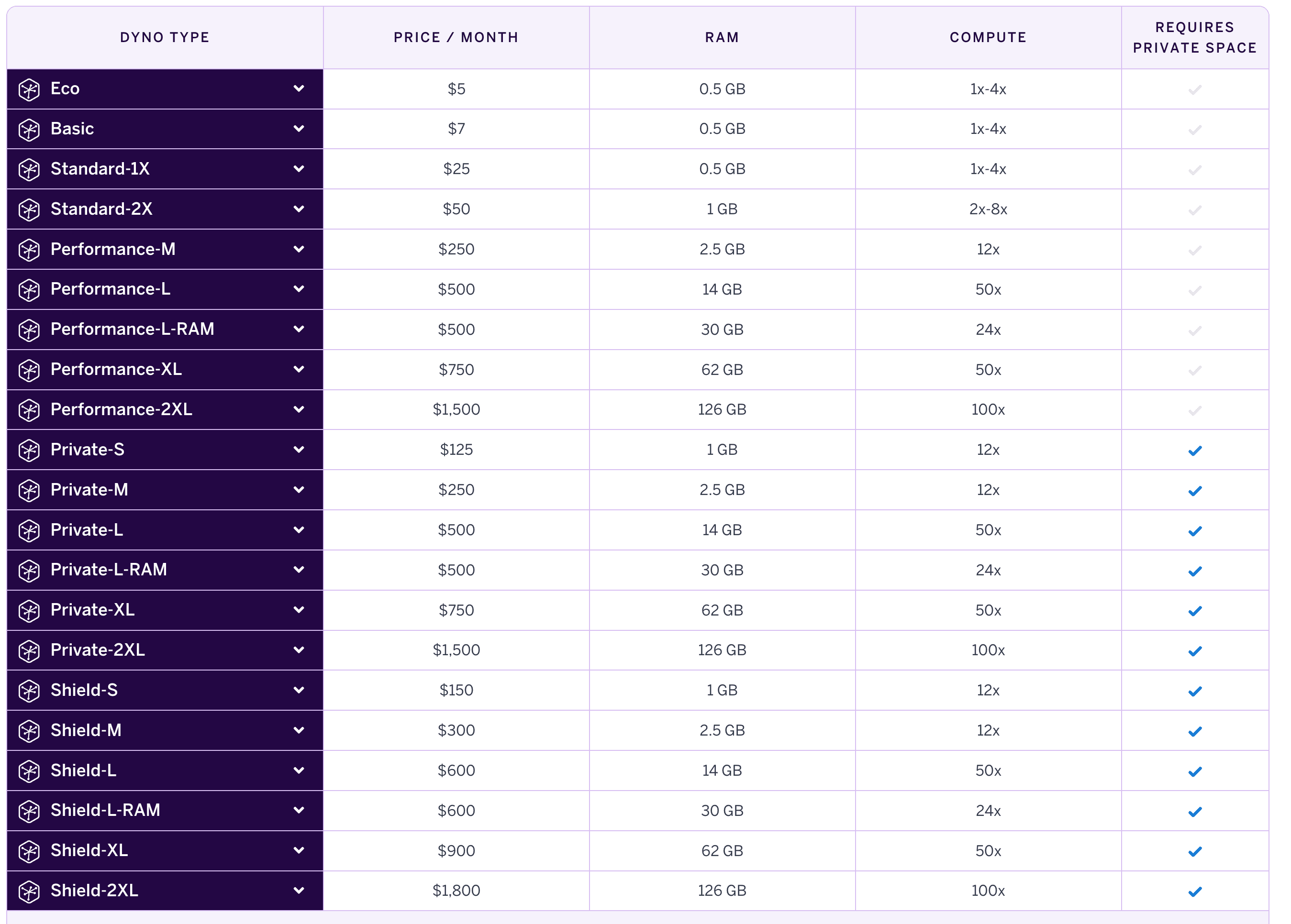

Heroku Instances

Similar to Render and DigitalOcean App Platform, Heroku follows a traditional, instance-based pricing model. You select the amount of compute resources you need from a list of instance sizes where each one has a fixed monthly price.

Fixed pricing results in:

- Under-provisioning: your deployed service doesn’t have enough compute resources, which will lead to failed requests

- Over-provisioning: your deployed service will have extra unused resources that you’re overpaying for every month

Horizontal autoscaling requires threshold tuning.

Since Heroku runs on AWS, additional costs include:

- Unlocking additional features (e.g., private networking is a paid enterprise add-on)

- Paying extra for resources (e.g., bandwidth, memory, CPU, and storage)

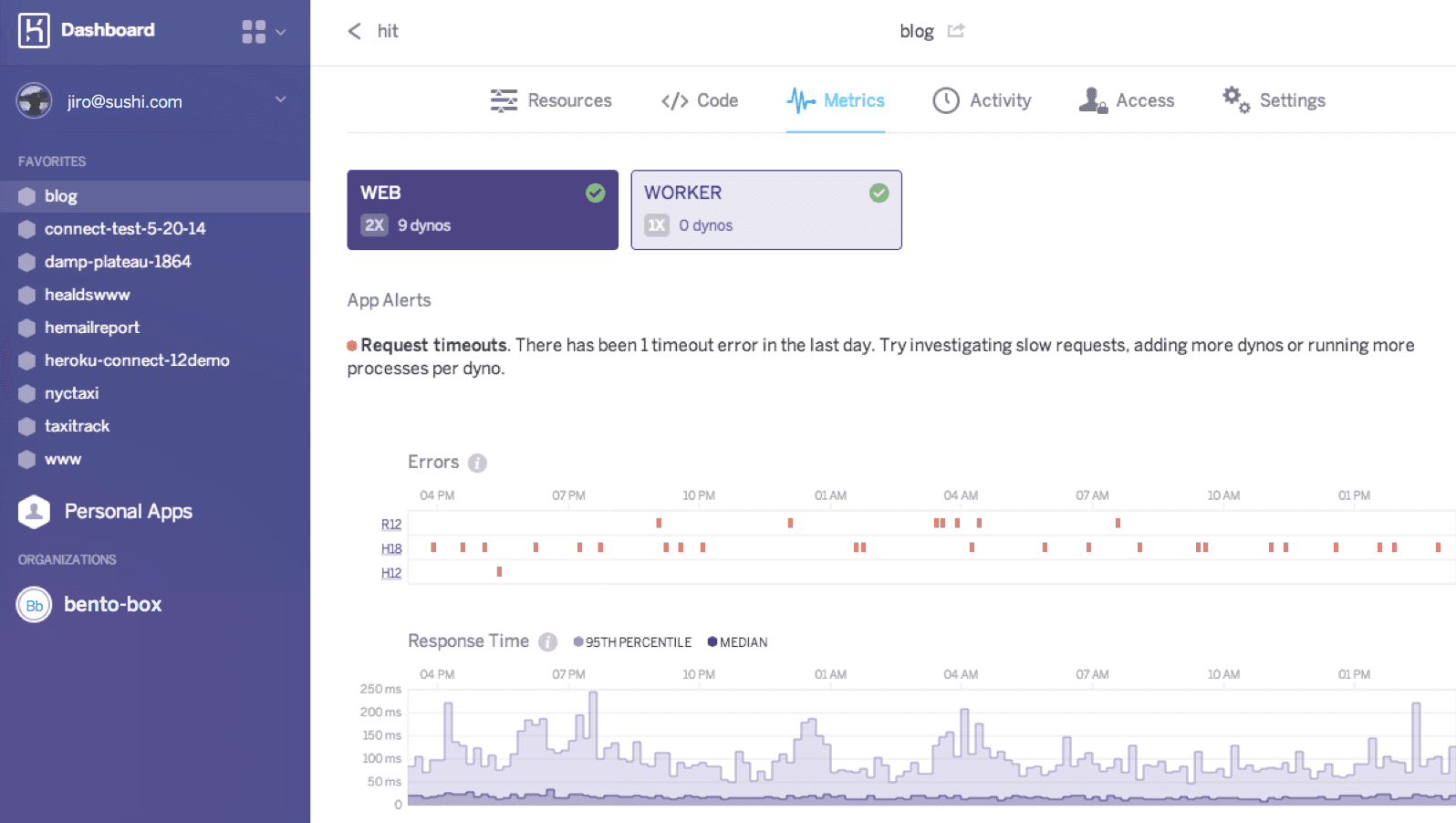

Dashboard and Organizational Structure

Heroku’s unit of deployment is the app, and each app is deployed independently. If you have different infrastructure components (e.g. API, frontend, background workers, etc.) they will be treated as independent entities. There is no top‑level “project” object that groups related apps.

Heroku Dashboard

Additionally, Heroku does not support shared environment variables across apps. Each deployed app has its own isolated set of variables, making it harder to manage secrets or config values shared across multiple services. Finally, Heroku doesn’t support wildcard domains. Each subdomain requires manual configuration.

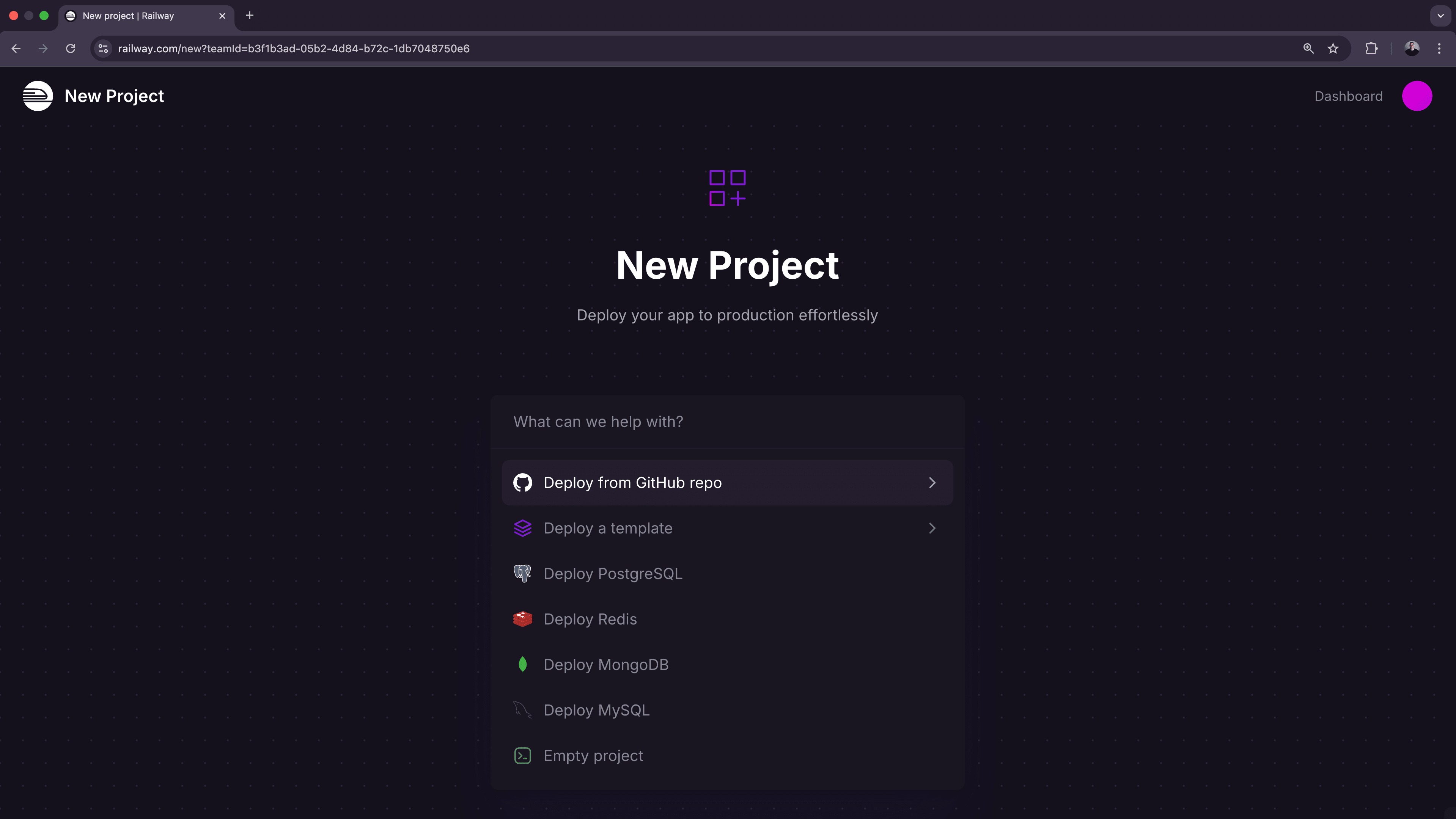

Getting started with Railway

To get started, create an account on Railway. You can sign up for free and receive $5 in credits to try out the platform.

- Choose “Deploy from GitHub repo”, connect your GitHub account, and select the repo you would like to deploy.

Railway onboarding new project

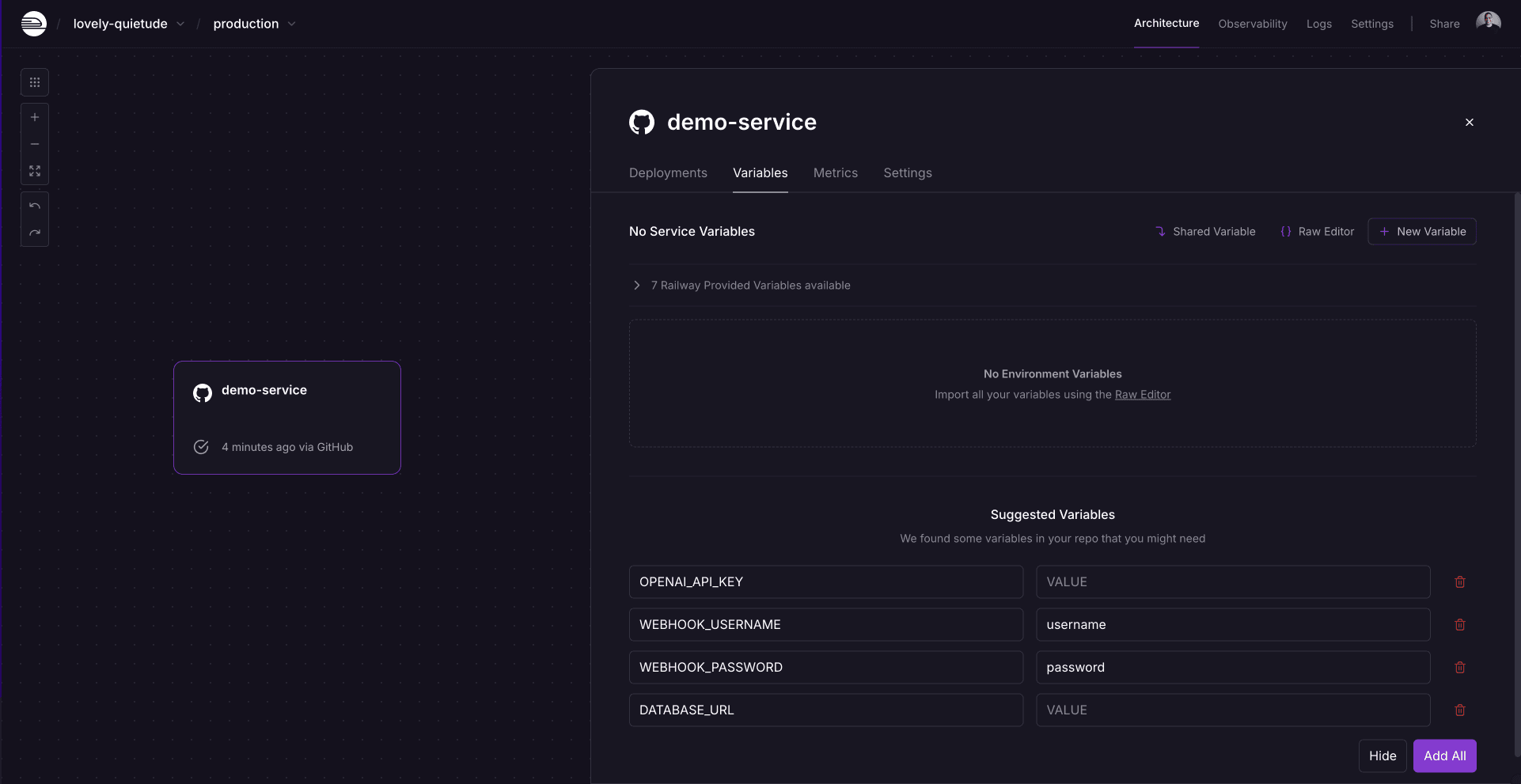

- If your project uses any environment variables or secrets:

  - Click on the deployed service.

  - Navigate to the “Variables” tab.

  - Add a new variable by clicking the “New Variable” button. Alternatively, you can import a .env file by clicking “Raw Editor” and adding all variables at once.

Railway environment variables

- To make your project accessible over the internet, you will need to configure a domain:

  - From the project’s canvas, click on the service you would like to configure.

  - Navigate to the “Settings” tab.

  - Go to the “Networking” section.

  - You can either:

If you need help along the way, the Railway Discord and Help Station are great resources to get support from the team and community.

For larger workloads or specific requirements, book a call with the Railway team.